"Amazon to add Postgres to its most-favored database list" says GigaOM:

"To many this is no-brainer. Amazon wants to support the databases that its developer audiences want to use. This is simply a case of Amazon responding to user demand and oh-by-the-way making its cloud infrastructure more attractive to a specific target audience. Some say Postgres has gained traction since Oracle’s acquisition of MySQL via its Sun buyout a few years back."

Some people I know said "yeah, the writing was on the wall...". Well, was it? Really?

AWS only now found the time to "plan" for supporting Postgres? After supporting MySQL, Oracle and SQL Server for almost 3 years?! The writing was on the wall? Where can I find a wall this old?

PostgreSQL has not picked up.

This is why it is a distant 4th on Amazon's list. The writer of the text above also makes a clear effort not to pick a side here: "to many this is a no-brainer" or "some say Postgres has gained traction".

It has been around for ages, through many "oh! it's now happening!" events, such as the acquisition of MySQL by Sun, then by Oracle...

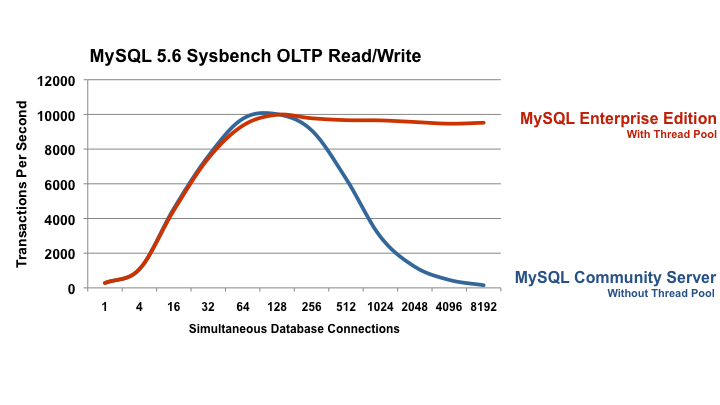

Technically, PostgreSQL's few superior capabilities, especially around online schema modifications (which are getting more important these days!), probably could not change its fate. It is still being held back by too many inferior capabilities around performance, robustness, ecosystem...

So - with plans for RDS, will Postgres now pick up?

Feel free to share your thoughts...